How We Will Use Photomicrographs in Virtual Reality

Imagining the applications of head mounted displays

Josh Shagam, USA

March 2016


It’s hard to miss the much-hyped wave of consumer-focused virtual reality technology headed our way. I’m not going to try to sell you on virtual reality with marketing promises or science fiction scenarios. Dozens of recent write-ups describe the joys of losing oneself in a 360-degree experience, and others break down the size of the market and the billions of dollars being poured into VR tech companies big and small [1]. Virtual reality is in an unusual position because “it has gotten big before having a major product...this hasn't really happened before on this scale,” and that has given me time to consider how it could move beyond the trap of being a solution in search of a problem [2]. There's no question that we are going to hear a lot about virtual reality this year and beyond. At the end of this article I have included a selection of thought-provoking articles that address broader themes related to VR. Here, my intention is to sketch out the microscopy-related virtual reality ideas that have the most staying power.

I’d like to speculate on how this new visual medium and content delivery platform will mesh with the images we make with microscopes. What does the new virtual reality hardware offer, in general terms? I'm primarily thinking about the Oculus Rift and HTC Vive, two products that will be available by the time you read this. Each company's offering includes an OLED head mounted display (HMD) and a slew of sensors for positional tracking and motion controller input. The other side of the coin is mobile VR (Google Cardboard, Gear VR), which uses smartphones to offer a slice of the same experience without the positional tracking or motion controllers. These cheaper avenues of virtual reality are capable and well suited to viewing experiences, but they are not as feature-rich as the Rift and Vive. In the context of viewing photographs, consumer virtual reality hardware offers us:

The ability to convey image scale in a psycho-physical way not possible with traditional desktop displays

The ability to “look around” and move in by leaning or walking toward the image, rather than zooming or scrolling with a mouse (i.e., intuitive viewing and interaction)

The promise of collaborative, avatar-aided, remote learning/reference environments

With this in mind, it is my opinion that the new generation of affordable virtual reality is a viable platform for scientific media. It should be considered both as a new display type in the lineage of the CRT and LCD and as a virtual environment in which social interaction, exploration of physical space, and communication of visually rich content are possible. Here are my three notions for how we will use consumer VR hardware, starting now, in connection with photomicrography.

Concept #1: The Pathologist’s Virtual Microscope Wall

As an instructor of photomicrography at Rochester Institute of Technology, I introduced a curriculum that embraced the “virtual microscope,” whereby students created extremely high-resolution composite photomicrographs and shared them online using the interactive Google Maps API (I wrote about it in the February 2013 issue of Microbe Hunter Magazine) [3]. I saw commercial products moving in this direction with all-in-one slide scanning units offered by Zeiss, Leica, and other companies, with the software infrastructure to match. These commercial solutions and homebrew, do-it-yourself stitching techniques alike generate very high-resolution image files containing gigabytes of visual data for a given specimen. This creates a display conundrum: how do you make use of all those pixels? How can you share them with others efficiently?

Virtual microscopy generally means using a computer network and an interactive user interface to pan, scroll, and zoom through widefield (often called whole-slide) images made from real, physical stained tissue samples on glass slides. It calls up only the image data visible on-screen at any one time to minimize the bandwidth and graphics processing load. This concept is most commonly realized through proprietary hardware and software used by pathologists, though research has shown that with current solutions, “diagnosis takes 60% longer with a virtual slide interface…the interface that commercial virtual slide systems provide is poorly suited to navigating gigapixel images” [4].
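The “only what is visible” behavior described above can be sketched as a tile lookup. This is a minimal sketch. It assumes the whole-slide image is stored as square 256-pixel tiles at each resolution level, which is a common layout but an assumption here. `visible_tiles` is a hypothetical helper, not the API of any commercial viewer.

```python
import math
from typing import List, Tuple


def visible_tiles(view_x: float, view_y: float, view_w: float, view_h: float,
                  image_w: int, image_h: int,
                  tile_size: int = 256) -> List[Tuple[int, int]]:
    """Return (column, row) indices of every tile that overlaps the viewport.

    All values are in pixels of one resolution level of the image pyramid.
    """
    # Clamp the viewport to the image so we never request tiles that do not exist.
    x0 = max(0.0, view_x)
    y0 = max(0.0, view_y)
    x1 = min(float(image_w), view_x + view_w)
    y1 = min(float(image_h), view_y + view_h)
    if x1 <= x0 or y1 <= y0:
        return []  # viewport lies entirely outside the image

    first_col, first_row = int(x0 // tile_size), int(y0 // tile_size)
    # x1 and y1 are exclusive edges, so a viewport ending exactly on a tile
    # boundary does not pull in the next tile.
    last_col = math.ceil(x1 / tile_size) - 1
    last_row = math.ceil(y1 / tile_size) - 1
    return [(col, row)
            for row in range(first_row, last_row + 1)
            for col in range(first_col, last_col + 1)]


# A hypothetical 100,000 x 80,000 pixel slide is 391 x 313 = 122,383 tiles,
# but a 1920 x 1080 view at the top-left corner touches only 40 of them.
tiles = visible_tiles(0, 0, 1920, 1080, image_w=100_000, image_h=80_000)
print(len(tiles))  # 40
```

Only those few tiles need to be fetched and decoded. The rest of the slide stays on the server until the user pans or zooms to it.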

The idea, then, is to adapt current virtual microscopy solutions to a true virtual reality environment that takes advantage of its unique abilities. It is an amusing misnomer that the term virtual reality microscope has been used for years, but it illustrates a strong connection between concept and desired function. Not only are we interested in simulating the visuals of looking through a microscope, but we also want to mimic, and improve upon, its interactive and intuitive function relative to the real-world experience. A virtual reality microscope interface could provide a faster, more fluid, and cost-effective tool for modern pathology.

In my research I have come across projects like KeckCAVES and the Pathology Powerwall that illustrate avenues of interaction with scientific image data. KeckCAVES is not a head-mounted display solution. It is a three-walled room onto which projectors display imagery that responds to the user's position, creating the illusion of three-dimensional objects in real space [5, 6]. This UC Davis project is specifically interested in “active visualization in the earth sciences” and has even developed a 3D visualizer for confocal microscopy data. Three-dimensional reconstructions of traditional microscope slides are also being evaluated, as described in the research paper Toward Routine Use of 3D Histopathology as a Research Tool [7].

The Pathology Powerwall, developed at the University of Leeds, sticks with two-dimensional displays but offers significant insight into real-world use and capabilities of a virtual microscope interface [8]. Started in 2006, the project offers a software solution for multiple desktop monitors or a wall-spanning array of side-by-side monitors (uncreatively termed Wall-Sized High-Resolution Displays or WHirDs). All of this is designed to serve up gigapixel whole-slide images that are commonly captured with automatic slide scanning devices. The user has a large display to inspect the image data while keeping a thumbnail directory and navigation panel nearby for a streamlined workflow. In the case of the Powerwall setup specifically, the idea is extended to allow for a small group of people to stand in front of an image and identify areas of interest as naturally as pointing to a map pinned on a wall. The Powerwall is ideally suited to academic environments, while the Leeds Virtual Microscope 2 software also developed by the research team caters to working pathologists using conventional desktop monitors [9]. Studies have shown that both avenues of displaying high resolution photomicrographs may eventually allow for faster diagnoses and function as valuable teaching aids [10, 11].

While these approaches are valuable, they are inaccessible to the homegrown microscopist. Until now, no affordable, user-friendly option has existed for those who want to work with microscope images on anything beyond the desktop monitor. True virtual reality using modern HMD hardware could drive the maturation and democratization of this established virtual microscope platform.

These exciting ideas are not free from fundamental hurdles, three of which I will address here: calibration, resolution, and accessibility. While all three will benefit from future development, they cannot be overlooked as obstacles in the present.

The first hurdle is display calibration, if the work is to be diagnostic in nature. Many displays used in medical imaging are held to the Digital Imaging and Communications in Medicine (DICOM) standard to ensure equal probability of detection (read: optimizing the system so a human viewer has the best chance to observe image data accurately) [12]. There is also an ICC Medical Imaging Working Group within the International Color Consortium that actively seeks to address color reproduction [13]. Depending on the area of medicine, inaccurate or otherwise uncalibrated displays cannot be used for diagnostic evaluation. As someone familiar only with using colorimeters to calibrate professional photo-editing displays, I don’t know how these devices can be adapted to measure head mounted displays. There will also need to be support for custom Look Up Tables (LUTs), presumably through the manufacturers’ drivers, to allow accurate, specialized handling of the image signal fed to the headset [14]. This is less imperative if the use is primarily academic, but it remains important in the grand scheme of using the devices in professional contexts [15].
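A short example can make the idea of a custom LUT concrete. This is a minimal sketch that assumes a simple power-law (gamma) model of the headset's response. Real medical calibration would target the DICOM Grayscale Standard Display Function rather than a plain gamma curve. The gamma values below are invented for illustration, and `build_correction_lut` and `apply_lut` are hypothetical helpers, not a driver API.

```python
from typing import List, Sequence


def build_correction_lut(measured_gamma: float, target_gamma: float,
                         bits: int = 8) -> List[int]:
    """Build a 1D LUT so a display with `measured_gamma` behaves like `target_gamma`.

    Assumed display model: luminance = (code / max_code) ** measured_gamma.
    We want luminance = (input / max_code) ** target_gamma, so each input code
    is sent to the display as (input / max_code) ** (target_gamma / measured_gamma).
    """
    max_code = (1 << bits) - 1
    exponent = target_gamma / measured_gamma
    return [round(((code / max_code) ** exponent) * max_code)
            for code in range(max_code + 1)]


def apply_lut(pixels: Sequence[int], lut: Sequence[int]) -> List[int]:
    """Remap each code value through the table before it reaches the headset."""
    return [lut[p] for p in pixels]


# Hypothetical measurement: the panel behaves like gamma 2.4, but we want 2.2.
lut = build_correction_lut(measured_gamma=2.4, target_gamma=2.2)
print(apply_lut([0, 64, 128, 255], lut))
```

A table like this is cheap to apply per pixel, which is why it would typically sit in the driver or GPU pipeline rather than in the viewing application.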

Resolution is another problem. The first wave of modern VR HMDs sport cutting-edge OLED displays that are high resolution for their size, but the net result is a fairly low 1080 by 1200 pixels per eye, spread across a wide field of view. Reports describe the visual fidelity of both the Vive and Rift as impressive. Even so, a photograph scaled to appear across a virtual room will be rendered using only a fraction of that resolution. Some early users describe a “screen door effect,” where the dark spaces between pixels are noticeable in a way that Retina displays and 4K TVs have trained us not to expect. This may become a limitation when the viewer attempts to parse fine detail from a small field of view. As a counterpoint, I offer that moving closer to the image in the virtual space (thanks to motion tracking) can counteract this. Unlike leaning into a desktop monitor, leaning into a virtual wall rendered in VR increases the angular size of the displayed image. More of the headset's pixels are then devoted to it, and its apparent magnification rises. The screen resolution is still fixed, but the software’s behavior can provide a unique scaling response based on an intuitive user movement. Additionally, there is reason to believe that high screen resolution matters less because virtual microscopy inherently involves zooming in on the image data within the software. A study conducted in 2014 investigated display resolution and its impact on virtual pathology diagnosis time. It found that a higher resolution display (or a set of displays) can make it easier to identify diagnostically relevant regions of interest quickly when viewing whole-slide images at lower magnifications. However, “when a comprehensive, detailed search of a slide has to be carried out, there is less reason to expect a benefit from increased resolution” [16]. Further experimentation and studies with VR HMDs will help to address my concerns.
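The leaning-in argument can be put into rough numbers. This sketch assumes, for illustration only, that the 1080 horizontal pixels per eye are spread evenly across about 100 degrees of view. Real headset optics spread them unevenly, and the exact field of view is an assumption here. The sketch asks how many of those pixels land on a virtual image 2 meters wide as the viewer approaches it.

```python
import math


def angular_width_deg(width_m: float, distance_m: float) -> float:
    """Full angle subtended by a flat image of the given width, viewed head-on."""
    return math.degrees(2.0 * math.atan(width_m / (2.0 * distance_m)))


def pixels_across(width_m: float, distance_m: float,
                  pixels_per_degree: float) -> float:
    """Approximate headset pixels spanning the image.

    Assumes uniform pixel density; this stops making sense once the image
    overflows the field of view.
    """
    return angular_width_deg(width_m, distance_m) * pixels_per_degree


# Assumed: 1080 pixels over ~100 degrees, i.e. about 10.8 pixels per degree.
PPD = 1080 / 100

for distance in (3.0, 2.0, 1.0):
    print(f"{distance:.0f} m away: ~{pixels_across(2.0, distance, PPD):.0f} pixels across")
```

Under these assumptions, walking from 3 m to 1 m more than doubles the headset pixels devoted to the image, from roughly 400 to roughly 970. That is the sense in which moving closer offsets the fixed panel resolution.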

Finally, the flexibility of current virtual microscope slide viewing (anyone with a web browser and internet connection can gain access) is lost when you introduce mandatory VR hardware. In other words, virtual reality will not be the solution for every existing or desired application. A web browser tool built on the Google Maps API provides a nearly universal platform that we can access on our phones, laptops and desktop computers with little difficulty. Virtual reality hardware faces an uphill battle before content access is ubiquitous. For now, the resource will be relatively exclusive.

Concept #2: The Science Educator’s Collaborative Annotation Library

A common reservation about virtual reality is how physically and socially isolating it may become. Donning a headset completely eliminates the possibility of making eye contact with someone who might be sitting right in front of you, so naturally we wonder whether the hardware will be destructive to interpersonal dynamics. However, the use of avatars (digital representations of ourselves) in virtual space may circumvent this. It is beyond the scope of my intentions (and expertise) to extol the value of avatars in general, but I can speak to their potential role in photomicrography. Professor Jeremy Bailenson is director of Stanford University’s Virtual Human Interaction Lab and a pioneering researcher on virtual reality. He has conducted numerous studies and coined the term Collaborative Virtual Environments, or CVEs, to describe avatar-based multi-user experiences [17].

I believe that CVEs are a natural fit for science education when they incorporate photomicrography and other visual media.

Imagine a virtual classroom where students and instructors can collaboratively view, interact with, and annotate microscope images. Much like virtual microscope curricula in place today, students can build a reference database of notes, observations and markers on top of virtual microscope content [18]. Community forum platforms use voting to promote preferred or correct content. A similar system would let classmates crowdsource homework answers and police them through the group’s assessment. Importantly, students will feel like they’re working in the room with their peers. Multiple documents or images can be displayed and studied in the environment. The instructor can hold “live” hours or opt to prerecord audio and avatar motion for asynchronous playback [19]. If you have been a student in recent years, you undoubtedly know the versatility, necessity and power of cloud-based, collaborative tools like Google Drive. Virtual reality applications can provide the same features built around visual media content rather than word processing, while also offering a greater sense of social presence.
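The forum-style voting idea could rest on a very small data model. This is a hypothetical sketch: the `Annotation` class and `ranked` function are illustrative names, not an existing product's API. It assumes each annotation is pinned to full-resolution slide coordinates and each classmate gets one up or down vote per annotation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Annotation:
    author: str
    slide_id: str
    x: float  # position in full-resolution slide pixels
    y: float
    text: str
    votes: Dict[str, int] = field(default_factory=dict)  # voter -> +1 or -1

    def vote(self, voter: str, value: int) -> None:
        """Record one vote per voter; voting again replaces the earlier vote."""
        if value not in (1, -1):
            raise ValueError("vote must be +1 or -1")
        self.votes[voter] = value

    @property
    def score(self) -> int:
        return sum(self.votes.values())


def ranked(annotations: List[Annotation]) -> List[Annotation]:
    """Highest net score first; ties keep their original order (sorting is stable)."""
    return sorted(annotations, key=lambda a: a.score, reverse=True)


notes = [
    Annotation("student_a", "liver_01", 1200.0, 850.0, "Possible mitotic figure"),
    Annotation("student_b", "liver_01", 1210.0, 845.0, "Apoptotic body, not mitosis"),
]
notes[0].vote("student_c", -1)
notes[1].vote("student_c", +1)
notes[1].vote("student_d", +1)
for note in ranked(notes):
    print(note.score, note.author, note.text)
```

Keying votes by voter prevents one student from inflating a score. A real classroom tool would also need permissions and instructor overrides, which this sketch leaves out.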

Concept #3: Fine Art Appreciation, Viewing for Pleasure & Social Media Sharing

We will see this concept realized immediately: simple image viewing. It is a logical first step for online news outlets’ image galleries, fine art publications, and visual media communities like 500px and Flickr. It is notable that Facebook (parent company to Oculus) has already developed and implemented a video format and streaming engine for embedding 360-degree video content into Facebook’s newsfeed [20].

I don't believe that virtual environments will threaten traditional photography or video, but we will want ways to experience both in VR, and a passive experience is the most familiar. Consider the last time you viewed an image slideshow on the New York Times or a similar website. Now recall the last time you went to an art museum or gallery exhibition. VR image galleries will offer us something right in the middle [21].

This concept could take cues from the previous two concepts I've discussed. The viewer could inhabit an art gallery space with images along the walls. The experience could be live, with the viewer seeing avatars of others accessing the media concurrently online. Comments, critiques and other feedback mechanisms could be available. It's worth noting that software applications such as Oculus Social, AltSpaceVR and BigScreen are actively developing functionality similar to what I am describing here, although none of those developers has microscopy in mind [22, 23, 24].

At this point you may be wondering how this concept relates to photomicrography in particular. It is self-evident that photomicrographs have an aesthetic appeal that stands on its own, independent of academic pursuits. Excellent visual media will always be desired and appreciated across a range of display media, technology and venues. Importing traditional photography into virtual spaces is just the beginning. As one artist writes, “like any new medium, virtual reality is tasked with defining its language…and enter into unexplored territory…there’s a real opportunity to develop a wholly new aesthetic experience” [25]. Photomicrography can evolve with VR if we actively develop and explore it.

Prototyping a Photomicrograph Application for VR

How close are we to having a virtual microscope wall application like the one I described earlier? Applications in development include solutions for displaying traditional, two-dimensional media. Oculus Video (previously called Oculus Cinema), for example, creates an environment that simulates sitting in front of a large movie screen or television, on which the user can view Netflix or similar streaming video content. Watching movies is a passive experience, meaning that the application simply needs to succeed at simulating a large-screen viewing experience. Sony and Vive announced similar applications for playing standard, two-dimensional PlayStation and PC games in VR, respectively. It is not a leap to introduce basic interface elements or controller inputs to manipulate the content shown on the virtual wall screen. In essence, the backbone of a virtual reality microscope “powerwall” already exists.

In writing this article, I connected with software engineer Peter Le Bek, who was interested in prototyping a virtual microscope solution for VR (if you can craft a catchy, less redundant name that will catch the attention of Silicon Valley angel investors, please let me know). As we talked through user interface ideas and tools, we established some basics for what an application could look like:

--A wall-size image projected in front of the user with zooming and panning interaction

--A browser directory of related slides that can be called onto the main viewing area

--A navigation panel showing an image file in its entirety and the zoomed area’s scope of view

--Annotation and collaboration tools (pointing, measuring, counting, marking, commenting)

--Multi-user support

--An environment that takes advantage of how natural and intuitive it is to “look around”

The images that follow are an initial workshopping of the concept. In fact, this article was nearly complete by the time Peter and I started talking via email. I hope that this provides a visual for many of the points I have made, though screenshots do not do the experience justice. A future article may be in order to demonstrate features and interface design in-depth as we continue to collaborate.

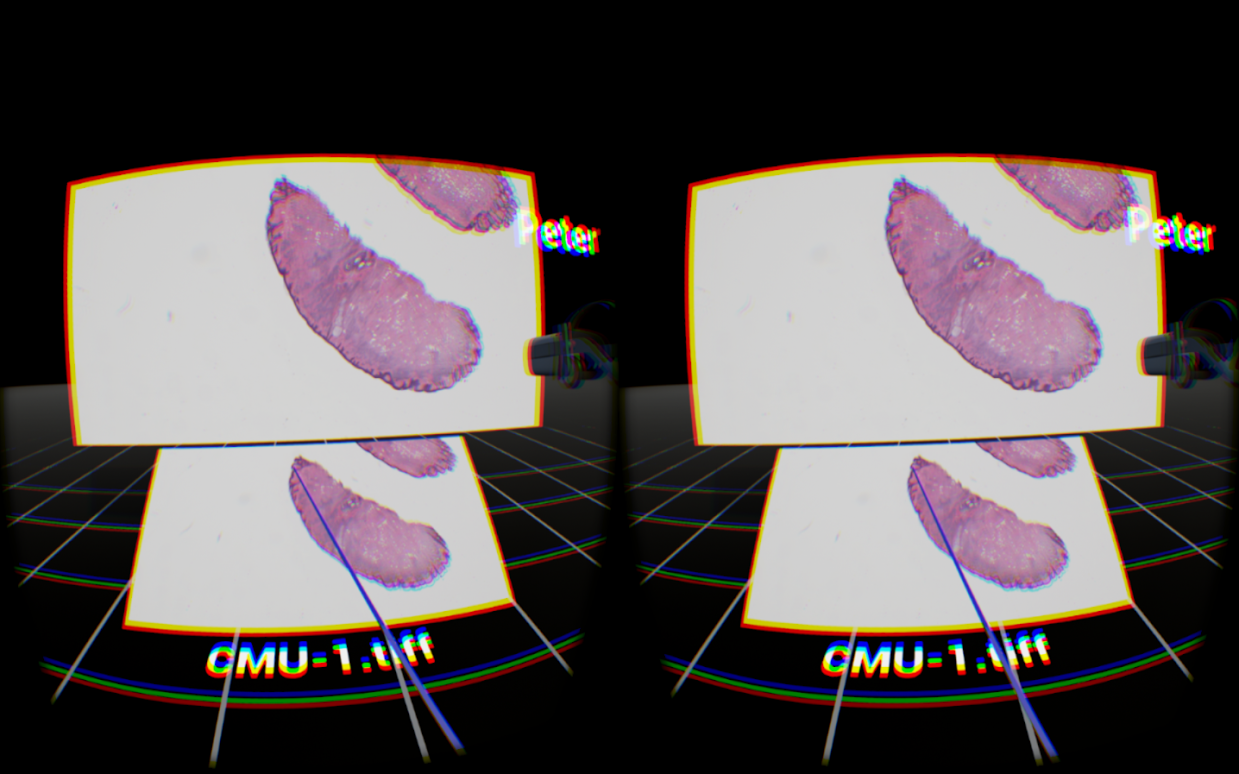

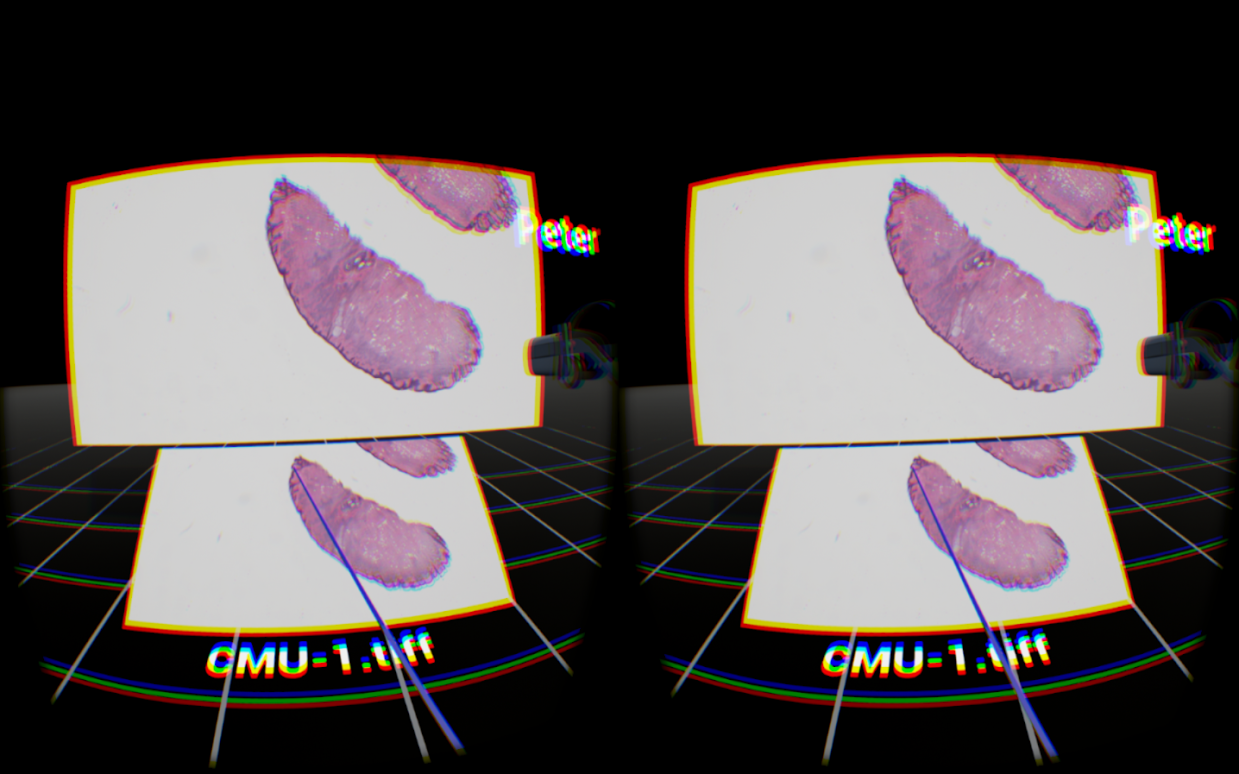

Figure 1: Our prototype, built on the Unreal Engine 4 game engine, has native support for virtual reality hardware. This image shows what the computer renders for the head mounted display. The distortion and chromatic aberration are there to counteract the artifacts introduced by the headset optics. Also note the floating HMD model and name tag, which represent a second user present in the environment.
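The pre-distortion visible in Figure 1 is commonly described with a radial polynomial. The sketch below is not the Unreal Engine 4 or Oculus implementation. It assumes the common approach of sampling the undistorted render at a radially scaled position for each output pixel. The coefficients `K1` and `K2` and the per-channel scales are made-up values. Scaling red, green and blue slightly differently is how the same pass can counteract the lens's chromatic aberration.

```python
from typing import Dict, Tuple

# Hypothetical coefficients; real values come from each headset's lens profile.
K1, K2 = 0.22, 0.24
# Hypothetical per-channel scale factors for chromatic aberration correction.
CHANNEL_SCALE: Dict[str, float] = {"red": 0.994, "green": 1.0, "blue": 1.014}


def source_coordinate(x: float, y: float, channel: str = "green") -> Tuple[float, float]:
    """Return where to sample the undistorted render for one output pixel.

    (x, y) are normalized coordinates centered on the lens axis. Sampling
    farther out as the radius grows pulls peripheral content toward the
    center, giving the barrel look that the headset lens then stretches back out.
    """
    r2 = x * x + y * y
    radial = 1.0 + K1 * r2 + K2 * r2 * r2
    scale = radial * CHANNEL_SCALE[channel]
    return x * scale, y * scale


print(source_coordinate(0.0, 0.0))          # the lens center is unchanged
print(source_coordinate(0.5, 0.0, "red"))
print(source_coordinate(0.5, 0.0, "blue"))  # blue samples slightly farther out
```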

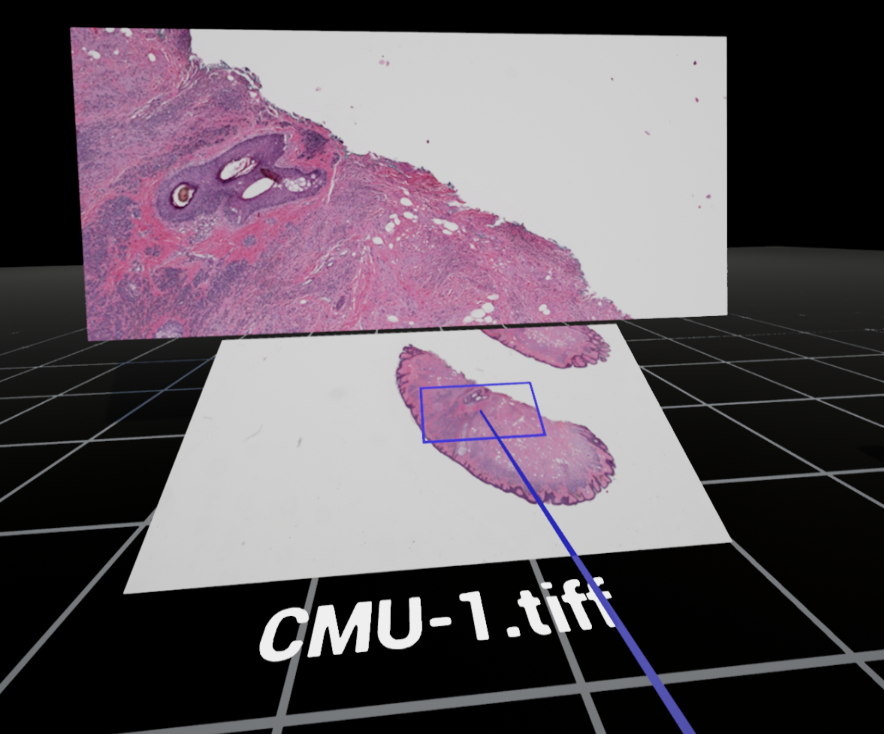

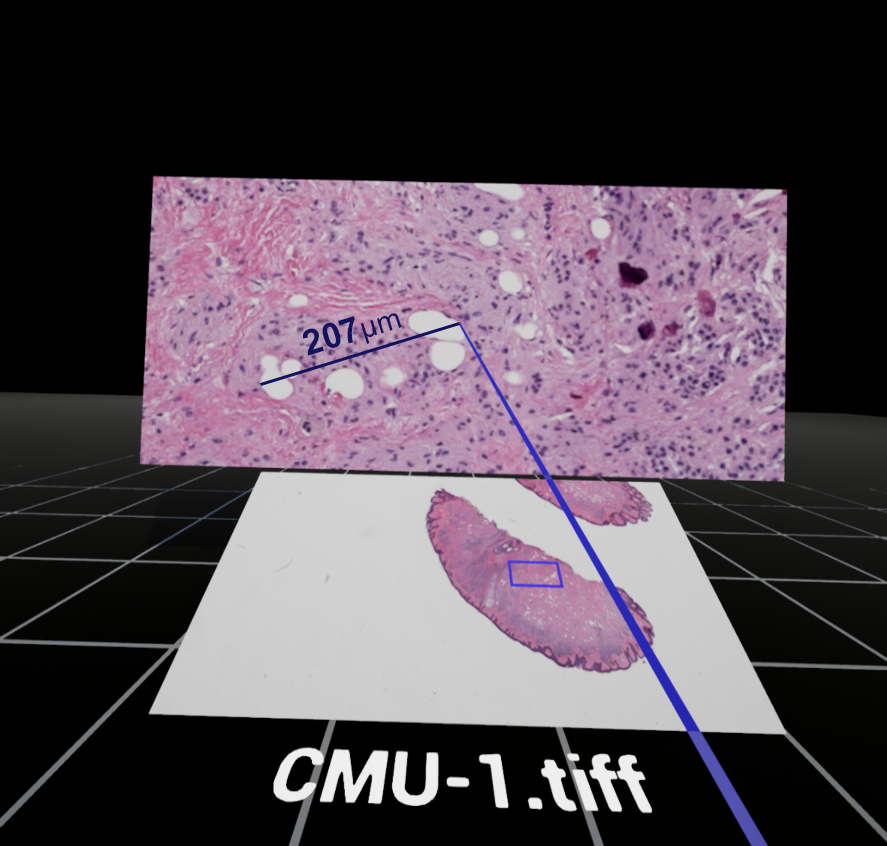

Figure 2: The basic interface uses a whole-slide overview panel slightly below the visual horizon. The main screen directly in front of the user shows the active, magnified view. Moving the blue box around on the overview panel changes the field of view for the main screen, while mouse or controller input allows for dynamic zooming of the image content. A pointer tool, shown here as a blue line, is available to the user to intuitively point, swipe and otherwise indicate in the environment. All present users are able to see one another’s pointers.
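The link between the blue box on the overview panel and the main screen is a change of scale. This is a minimal sketch. It assumes the overview is an undistorted thumbnail of the entire slide and that positions are measured in thumbnail pixels. `Rect` and `overview_to_slide` are hypothetical names, not taken from the prototype's code.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    x: float
    y: float
    w: float
    h: float


def overview_to_slide(box: Rect, thumb_w: int, thumb_h: int,
                      slide_w: int, slide_h: int) -> Rect:
    """Convert the overview box (thumbnail pixels) to a full-resolution slide region."""
    scale_x = slide_w / thumb_w
    scale_y = slide_h / thumb_h
    # Keep the box on the thumbnail so the main screen never shows empty space.
    x = min(max(box.x, 0.0), max(0.0, thumb_w - box.w))
    y = min(max(box.y, 0.0), max(0.0, thumb_h - box.h))
    return Rect(x * scale_x, y * scale_y, box.w * scale_x, box.h * scale_y)


# Hypothetical 1000 x 800 overview of a 100,000 x 80,000 pixel slide.
print(overview_to_slide(Rect(10, 20, 50, 25), 1000, 800, 100_000, 80_000))
```

Zooming then amounts to shrinking or growing the box, and the same function converts that into a smaller or larger slide region.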

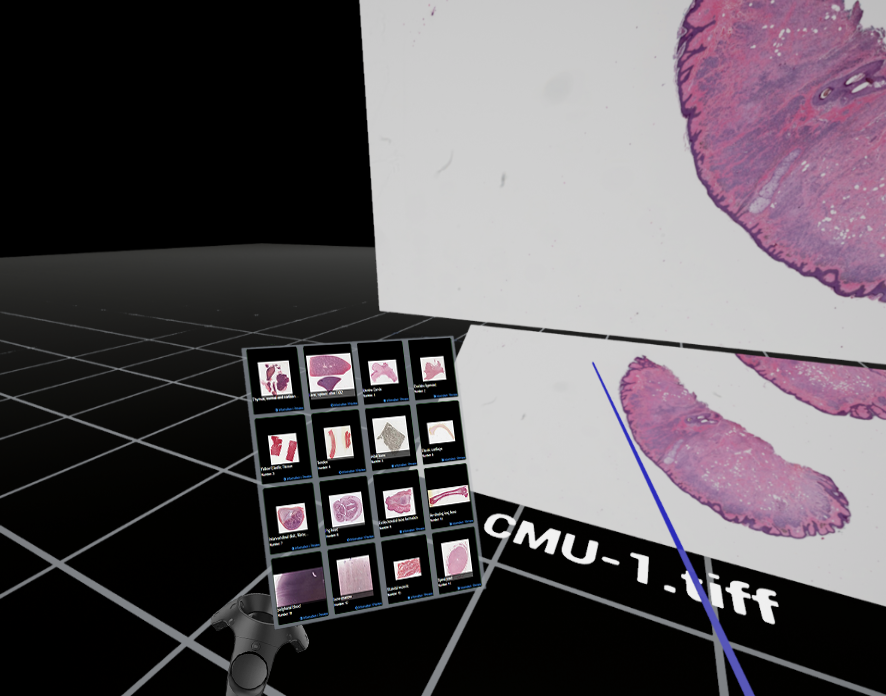

Figure 3: A panel can be toggled to show a thumbnail directory of related whole-slide images for the user to call up onto the main screen. A file name is always present below the overview panel to provide context and supplementary metadata if necessary.

Figure 4: The user can start a measurement with the pointer tool by defining its start and end locations. A ruler line is drawn between the two points and the distance is automatically calculated.
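The arithmetic behind the ruler is a pixel distance scaled by the slide's physical pixel size. When the scanner records that size, OpenSlide reports it through the `openslide.mpp-x` and `openslide.mpp-y` (microns per pixel) properties. The sketch below is a hypothetical helper, not the prototype's code. It assumes the two points are taken on a pyramid level with a known downsample factor, and the 0.25 µm per pixel value is only an example.

```python
import math
from typing import Tuple


def ruler_length_um(start: Tuple[float, float], end: Tuple[float, float],
                    mpp_x: float, mpp_y: float, downsample: float = 1.0) -> float:
    """Distance between two picked points, in micrometers.

    `start` and `end` are pixel coordinates on a pyramid level whose
    resolution is `downsample` times coarser than full resolution (level 0).
    `mpp_x` and `mpp_y` are level-0 microns per pixel, which may differ.
    """
    dx_um = (end[0] - start[0]) * downsample * mpp_x
    dy_um = (end[1] - start[1]) * downsample * mpp_y
    return math.hypot(dx_um, dy_um)


# Example values only: 0.25 um/pixel at level 0, points picked at level 0.
print(ruler_length_um((0, 0), (300, 400), mpp_x=0.25, mpp_y=0.25))  # 125.0
```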

All of this homegrown prototyping has been made possible by the Unreal Engine 4 game engine (which is free for anyone to use), the open source virtual slide C library OpenSlide, and the in-depth research summarized in The Design and Evaluation of Interfaces for Navigating Gigapixel Images in Digital Pathology [26, 27, 28]. We also gleaned insights from the University of Leeds’ documentation of the Pathology Powerwall mentioned earlier.
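OpenSlide exposes a whole-slide image as a pyramid of levels, each with a downsample factor relative to full resolution. Its API includes a call for choosing which level to read: `openslide_get_best_level_for_downsample` in C, or `get_best_level_for_downsample` in the Python binding. The stdlib-only sketch below imitates that idea so it runs without a slide file. `best_level` and `requested_downsample` are hypothetical names, and the level list is an example rather than a real scanner's output.

```python
from typing import Sequence


def requested_downsample(region_width_level0: float, screen_width_px: int) -> float:
    """Full-resolution pixels that must squeeze into each on-screen pixel."""
    return region_width_level0 / screen_width_px


def best_level(level_downsamples: Sequence[float], downsample: float) -> int:
    """Pick the coarsest level that is still at least as detailed as requested.

    Assumes levels are ordered from full resolution (downsample 1.0) to coarsest.
    Falls back to level 0 when the user zooms past full resolution.
    """
    best = 0
    for level, level_ds in enumerate(level_downsamples):
        if level_ds <= downsample:
            best = level
    return best


# Example pyramid: full resolution plus 4x, 16x and 64x reductions.
levels = [1.0, 4.0, 16.0, 64.0]
# Showing a 20,000-pixel-wide region on a 1,920-pixel-wide virtual screen:
ds = requested_downsample(20_000, 1920)  # about 10.4
print(best_level(levels, ds))  # 1 (the 4x level)
```

Reading the 4x level and scaling it down slightly keeps detail on screen while touching far less data than level 0 would.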

It is important that the user interface (UI) does not encourage screen thrashing, a term that describes “when users need to make an excessive number of panning/zooming actions to navigate between the data they wish to investigate…even if the application updates the display in real-time, the cognitive lag is considerable because of the number of pan/zoom steps that users have to make. This time-delay impedes users' understanding of their data and discourages exploration” [29]. In other words, the interface should limit how often the user has to zoom in, then zoom out to get context, then zoom back in to a new area of interest. This matters even more in virtual reality headsets, where abrupt and repetitive scene changes can lead to motion sickness and eye fatigue. It is one of many challenges a VR photomicrography application faces: UI design’s role in the success of virtual microscopy cannot be overstated [30, 31].
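One small mitigation for abrupt scene changes is to animate zoom changes instead of jumping between them. It does not reduce the number of pan and zoom steps, which is the heart of screen thrashing. This sketch is an illustrative assumption, not a feature of the prototype described here. It interpolates zoom in logarithmic space, so going from 1x to 2x is the same size of step as going from 20x to 40x, and it eases in and out with a smoothstep curve. The frame count is arbitrary.

```python
import math
from typing import List


def zoom_path(start_zoom: float, end_zoom: float, frames: int) -> List[float]:
    """Per-frame zoom levels for a smooth transition between two magnifications.

    Interpolates log(zoom) so equal magnification ratios are equal steps,
    and eases with smoothstep so the motion starts and stops gently.
    """
    if frames < 2:
        return [end_zoom]
    log_start, log_end = math.log(start_zoom), math.log(end_zoom)
    path = []
    for i in range(frames):
        t = i / (frames - 1)             # 0.0 on the first frame, 1.0 on the last
        eased = t * t * (3.0 - 2.0 * t)  # smoothstep easing
        path.append(math.exp(log_start + (log_end - log_start) * eased))
    return path


# 45 frames is half a second at the Rift and Vive's 90 Hz refresh rate.
path = zoom_path(1.0, 40.0, frames=45)
print(round(path[0], 3), round(path[-1], 3))
```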

We made huge strides in creating an accessible solution after just a few weeks of collaboration during our nights and weekends. Developing a virtual reality microscope application that is based on freely available, open source tools is not only possible but fundamentally empowering to interdisciplinary, grassroots efforts like ours.

Concluding Thoughts

Microscopy speeds along in tandem with scientific and technological breakthroughs. I see advances in display technology and user interfaces as just as important to the field as advances in camera sensors or optics. Virtual reality and the new generation of head mounted displays offer considerable utility to our passion for imaging the microscopic world, and there will be a whirlwind series of discoveries and insights once the microscopy community has access to the hardware. I can think of nothing more exciting than getting people interested in bringing photomicrography, and photography as a whole, into our future virtual spaces.

My own Oculus Rift pre-order will arrive soon. As a gadget enthusiast and visual artist, I find it fascinating to consider where we are headed. The admittedly significant barrier to entry right now (computer horsepower, HMD hardware, input devices) will prevent an overnight transformation, but there's so much to discover in the meantime. I’m especially eager to see how creative problem-solvers will unravel the dense ball of yarn that virtual reality offers. I could be wrong about every single idea mentioned here, and that’s okay. But putting optimistic, conceptual ideas out into the world is the first step to seeing them realized. I hope that we will be sharing photomicrographs in virtual reality soon.

Josh Shagam is an image quality engineer and former visiting assistant professor of photographic sciences. He would love to hear from you.

References

1. 8 of the Top 10 Companies in the World are Invested in VR/AR

2. What does virtual reality want to be when it grows up? Three scenarios for the future

3. Microbe Hunter Magazine February 2013

4. The Design and Evaluation of Interfaces for Navigating Gigapixel Images in Digital Pathology

5. UC Davis KeckCAVES, Confocal Microscopy

6. The Virtual Reality Cave: Behind the Scenes at KeckCAVES

7. Toward Routine Use of 3D Histopathology as a Research Tool

8. Virtual Pathology at the University of Leeds

9. Sale of Virtual Microscope Technology

10. From Microscopes to Virtual Reality? How Our Teaching of Histology is Changing

11. OpenSlide: A Vendor-Neutral Software Foundation for Digital Pathology

13. ICC Medical Imaging Working Group

14. Consistency and Standardization of Color in Medical Imaging: A Consensus Report

15. Evaluating Whole Slide Imaging: A Working Group Opportunity

17. The Use of Immersive Virtual Reality in the Learning Sciences

18. Using a High-Resolution Wall-Sized Virtual Microscope to Teach Undergraduate Medical Students

19. Lecture VR, Immersive VR Education

20. The Nuts and Bolts Behind Facebook’s 360-Degree Videos

21. How We Created the First VR Photo Exhibition Featuring the EyeEm Community

22. Oculus Social Alpha Delivers Group Watching to Virtual Reality

23. AltSpaceVR

24. BigScreen VR

25. Virtual Reality is the Most Powerful Artistic Medium of our Time

26. Unreal Engine 4

27. The Design and Evaluation of Interfaces for Navigating Gigapixel Images in Digital Pathology

28. OpenSlide

30. Why Design Matters More than Moore

31. Slide Navigation Patterns Among Pathologists with Long Experience of Digital Review

Further Reading

Why You Should Try That Crazy Virtual Reality Headset

Virtual Reality and Next-Gen Experiences

Beyond Gaming: 10 Other Fascinating Uses for Virtual Reality Tech

VR Will Make Science Even Cooler

From Microscopes to Virtual Reality: How Our Teaching of Histology is Changing

The Design and Evaluation of Interfaces for Navigating Gigapixel Images in Digital Pathology

A New Microscope Uses Virtual Reality to Let You Walk Through Atomic-Level Vistas

When Virtual Reality Meets Education

The Promise of Virtual Reality in Higher Education


Published in the May 2016 edition of Micscape Magazine.


Micscape is the on-line monthly magazine of the Microscopy UK website at Microscopy-UK.

© Onview.net Ltd, Microscopy-UK, and all contributors 1995 onwards. All rights reserved.

Main site is at www.microscopy-uk.org.uk.